[People We Met at MARU] Yeonseok Kim, CEO of ZETIC.ai: “We are building a one-stop on-device AI conversion solution for AI companies”

Tech42

2024. 12. 19.

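Spent about six years on on-device AI research and development at the Qualcomm AI Research Lab, where he also developed an AI-based speech recognition module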

Launched ‘ZETIC.MLange’, which converts GPU cloud-based AI services to on-device AI in one stop

Solves the NPU compatibility problem, opening the way to AI services that can run on any operating system, processor, or device

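NPU-based on-device AI is driving innovative applications in smartphones, smart speakers, wearable devices, and many other areas. On-device AI is expected to grow in importance in autonomous vehicles, smart homes, and healthcare as well. (Image: generated by Pulitzer AI)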

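This past June, Apple unveiled Apple Intelligence, which brings on-device AI to its iPhones. Before that, Samsung Electronics had found success by building on-device AI features into its Android-based Galaxy S24.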

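Until recently, attention in AI centered on approaches that consume enormous amounts of capital, such as large language models (LLMs) and generative AI. This year, however, critics began pointing out that no clear revenue model has emerged relative to the investment, and talk of a bubble followed. The underlying problem is the enormous cost of building and operating the GPU (graphics processing unit) infrastructure that AI development has relied on so far.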

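The alternative that has emerged is on-device AI running on the NPU (Neural Network Processing Unit, the so-called AI chip). On-device AI performs AI computation on the device itself instead of sending data to the cloud. Because it needs no internet connection, it avoids network round trips and responds quickly, and it is also strong on privacy protection. Above all, because no server is involved, it can cut costs dramatically.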

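With NPUs expected to spread from mobile devices and laptops to a widening range of hardware, a growing number of AI companies have recently begun developing services based on on-device AI. But several major obstacles stand in the way of moving an existing GPU cloud-based AI service to on-device AI.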

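The first is the poor compatibility among the NPUs that on-device AI depends on. The NPUs developed by MediaTek, which holds 40% of the NPU market, and by Apple, Qualcomm, Samsung, and others each rely on a different framework for AI service development. In other words, building an AI application means producing a separately optimized version for each NPU. A shortage of experts in a rapidly changing market compounds the problem.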
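To make this fragmentation concrete, the following minimal sketch counts the builds an app team would have to maintain if every model needed its own version per NPU vendor. The article names the vendors but not their toolchains; Core ML, Qualcomm AI Engine Direct, and NeuroPilot are the vendors' publicly documented SDKs, while the Samsung entry is left as a placeholder. The names `VENDOR_TOOLCHAIN`, `BuildTarget`, and `required_builds`, and the two example models, are illustrative assumptions.

```python
from dataclasses import dataclass

# Vendor -> toolchain an app team would typically target.
# Illustrative only: the article names the vendors, not their SDKs.
VENDOR_TOOLCHAIN = {
    "Apple": "Core ML",
    "Qualcomm": "Qualcomm AI Engine Direct",
    "MediaTek": "NeuroPilot",
    "Samsung": "vendor SDK (placeholder)",
}


@dataclass(frozen=True)
class BuildTarget:
    model: str
    vendor: str
    toolchain: str


def required_builds(models, vendors):
    """Return one build target per (model, vendor) pair."""
    return [
        BuildTarget(model, vendor, VENDOR_TOOLCHAIN[vendor])
        for model in models
        for vendor in vendors
    ]


# Hypothetical example: two models shipped to all four vendors.
builds = required_builds(["speech-to-text", "segmentation"], list(VENDOR_TOOLCHAIN))
print(len(builds))  # 2 models x 4 vendors = 8
```

The count grows multiplicatively with every added model or vendor, which is the maintenance burden the paragraph above describes.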

Against this backdrop, a startup offering a solution that helps mobile AI services move on-device is drawing attention: ZETIC.ai, founded by former Qualcomm engineer Yeonseok Kim.

A startup founded by an on-device AI specialist with some six years at Qualcomm

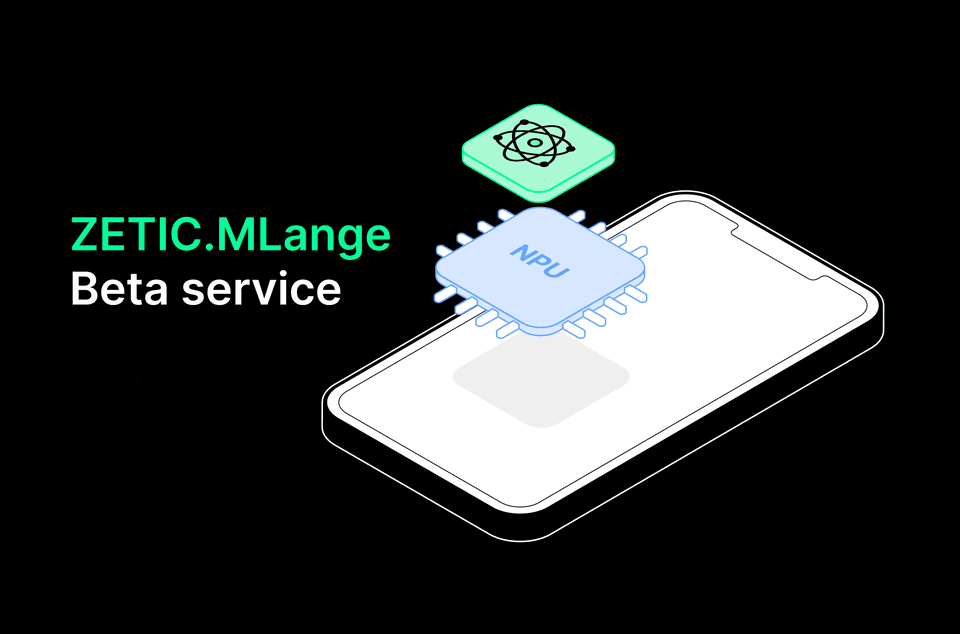

MLange is an automation solution that turns existing AI models (STT, TTS, segmentation, generative AI, and so on) into on-target AI model libraries and integrates them as on-device AI.

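Founded in March this year, ZETIC.ai launched the beta version of its integrated on-device AI solution, ZETIC.MLange (hereafter MLange), in July, just four months after the company was established. MLange is an automation solution that turns existing AI models (STT, TTS, segmentation, generative AI, and so on) into on-target AI model libraries and integrates them as on-device AI.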

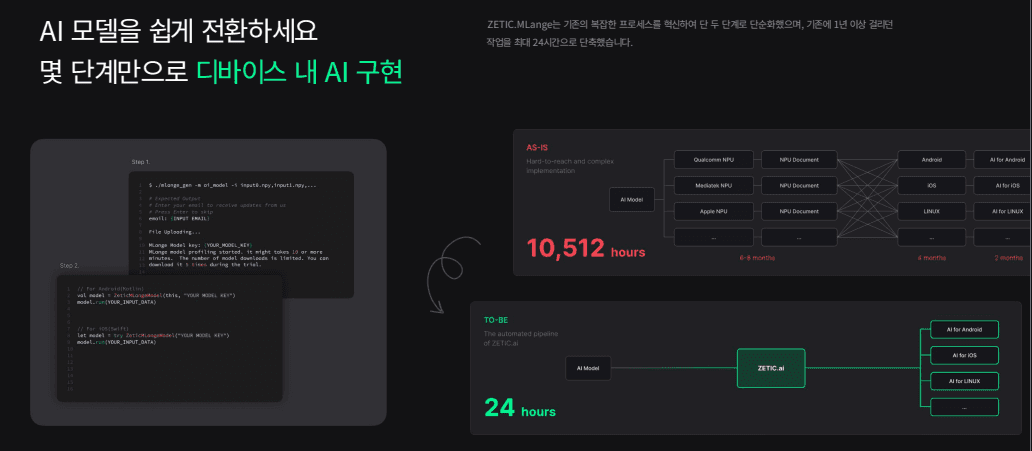

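An AI service company that adopts MLange can move its service's computation onto the device and save on server costs. It can also develop AI service applications more easily for any operating system, processor, or device. Even in the beta version, MLange supports operating systems including Android, iOS, and Linux, as well as NPUs from major AI chip developers such as MediaTek, Qualcomm, and Apple. According to ZETIC.ai, any company with an AI model can build an on-device AI application deployable to any device within 24 hours, without separate engineering work.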

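The company was able to develop and release its solution this quickly thanks to the technical expertise of CEO Kim, who worked at the Qualcomm AI Research Lab from 2017 until founding the company, focusing on NPUs and on-device AI. At Qualcomm, Kim developed an NPU-based AI framework and the Qualcomm On-Device AI toolkit, among other things, building up rare expertise in on-device AI. The speech recognition module on the Qualcomm chips built into AI speakers from Amazon and various other companies is also his work.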

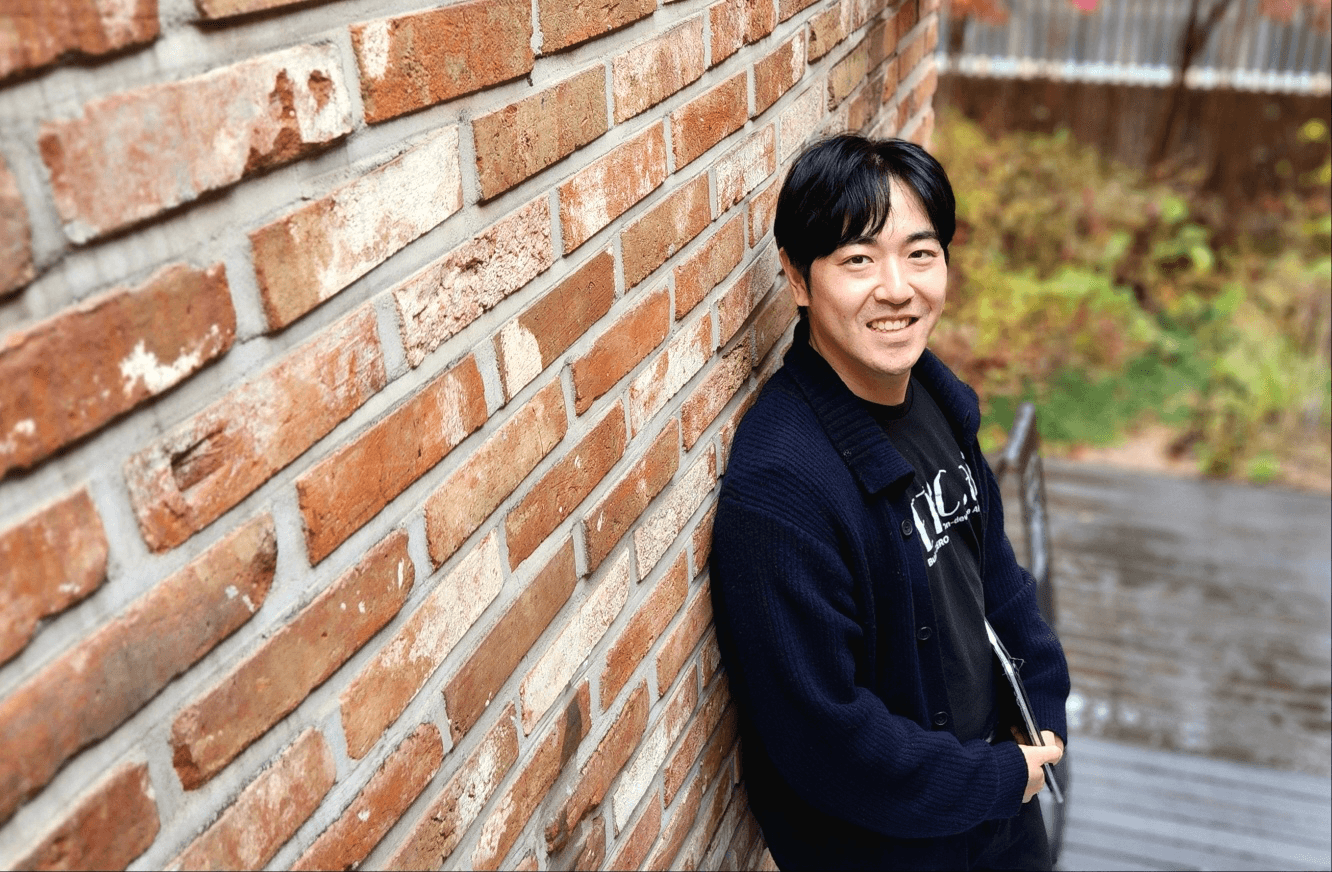

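At Qualcomm, ZETIC.ai CEO Yeonseok Kim developed an NPU-based AI framework and the Qualcomm On-Device AI toolkit, among other things, building up rare expertise in on-device AI. The speech recognition module on the Qualcomm chips built into AI speakers from Amazon and various other companies is also his work. (Photo: Tech42)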

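We met Kim at ZETIC.ai's office in MARU180, the Asan Nanum Foundation's platform for entrepreneurs, where the company recently settled. He looked back on his time at Qualcomm by saying he had been “lucky.”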

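“When I joined Qualcomm, there weren't many people who had majored in AI. I joined as a software engineer myself and built a framework that approached AI from a software perspective. On-device AI, which was called embedded AI back then, also didn't get much attention in an AI development field where large-scale GPU-based training was the trend. But I expected a market for on-device AI to open up. Actually, I thought it would happen around next year, so it came a bit earlier than I expected. The obvious reason is the cost problem sitting behind AI technology. After all, the biggest advantages of on-device AI come from not running servers.”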

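As the market opened up, Kim began to envision a general-purpose AI framework solution that would go beyond the limits of developing on-device AI solely for Qualcomm NPUs and work on every NPU. The fact that the mobile NPU market has no dominant player, in contrast to Nvidia's dominance of the GPU market, was another motivation for starting the company.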

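“If you look at the overall market for mobile AI chips, MediaTek leads with 40%, Qualcomm and Apple have about 20% each, and Samsung's Exynos has around 6%. In that situation, no matter how well Qualcomm did, the size of the pie was already fixed. The idea that I should build AI software that could break through that market limit is what led me to start the company.”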
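As a rough sanity check on these figures, the sketch below tallies the shares Kim quotes against the three NPU vendors the article says the MLange beta supports (MediaTek, Qualcomm, and Apple). The numbers are Kim's approximations rather than verified market data, and treating a vendor's market share as device coverage is a simplifying assumption. The names `SHARES`, `BETA_SUPPORTED`, and `coverage` are illustrative.

```python
# Mobile NPU market shares as quoted by Kim in the interview (percent).
SHARES = {"MediaTek": 40, "Qualcomm": 20, "Apple": 20, "Samsung Exynos": 6}

# NPU vendors the article says the MLange beta supports.
BETA_SUPPORTED = {"MediaTek", "Qualcomm", "Apple"}


def coverage(shares, supported):
    """Sum the quoted share of every vendor in the supported set."""
    return sum(pct for vendor, pct in shares.items() if vendor in supported)


covered = coverage(SHARES, BETA_SUPPORTED)
unattributed = 100 - sum(SHARES.values())

print(covered)       # 80
print(unattributed)  # 14
```

The 80% total is in line with the coverage the article attributes to the beta. The quoted shares sum to 86%, so roughly 14% of the market is not attributed to any vendor in the quote.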

An on-device AI solution to reshape an SoC industry built on non-universal AI frameworks

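As noted above, ZETIC.ai's MLange runs not only on the Qualcomm NPUs commonly found in Android devices but also on Apple's A-series and M-series chips, and it is designed to get the most out of the NPU. AI companies only need to feed their AI model and data into MLange. It then automatically runs an optimization process and converts the model into a software library suited to the NPU in each Android or iOS device. At that point, all the server work that used to run on a GPU cloud is replaced by work inside the on-device library. In effect, server costs drop to zero. “In developing AI applications that handle sensitive personal information, such as healthcare, not running a server will be a major advantage,” Kim said, and continued: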

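“With the conventional way of running an application, the data is sent wholesale to the company's servers, processed there, and then served back to the user. The problem at that point goes beyond cost to the management of personal information. The moment companies fail to manage it properly, there is considerable room for problems such as personal data leaks. It's just that the issue is buried under the AI theme right now and hasn't become a public debate. Another advantage of on-device AI is that it can block that leak risk at the source.”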

Any company with an AI model can use MLange to build an on-device AI application deployable to any device within 24 hours, without separate engineering work. (Image: ZETIC.ai)

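Because it aims to be “a solution that supports building on-device AI that can use every NPU,” MLange is expected to shake up the existing SoC (system on chip) ecosystem, in which a variety of non-universal AI frameworks are in use. Kim likewise described ZETIC.ai's direction by saying, “We intend to take an independent path that isn't entangled with the interests of hardware companies.”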

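“For AI companies to enter the on-device AI market, even choosing the hardware used to be a problem. If you have an issue with a particular hardware company, it becomes awkward to launch a new service on devices that use that company's NPU. We are also trying to stay as independent as possible from NPU manufacturers. Rather than replacing things with something new, our direction is AI technology that makes use of existing hardware. That's why, for now, we are focusing squarely on mobile on-device AI. Our goal is to make it possible to put AI into the smartphones everyone is carrying right now.”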

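Even at the current beta stage, MLange can run on 80% of the mobile NPUs deployed worldwide. The goal going forward is to fill in the remaining 20% that is not yet supported, and ultimately to support on-device AI services that run on every NPU-equipped device in the world. Speaking of when that might happen, Kim shared his vision of a “coexisting ecosystem.”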

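“We are building up test cases and improving the product's maturity ahead of the official release early next year. I've found that when AI software succeeds and becomes a well-known player in the industry, it ends up in a position that hardware companies want to join. Once we are firmly established as that kind of AI software, I think hardware companies will focus on building an ecosystem together with us, without us having to depend on them. If that happens, I believe we can become the standard that anyone building an AI service uses as a matter of course.”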

Citing the “democratization of AI,” Kim also shared plans to expand the business beyond mobile, including support for other hardware and AI model development. (Photo: Tech42)

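Kim's points were persuasive, but they also raised questions about the technical limits of on-device AI: performance is constrained compared with cloud-based servers, and battery consumption rises because computation happens on the device. Kim acknowledged that “the limits are clear” but stressed that “the market for on-device AI services is large enough.”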

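“There are limits to running LLMs, such as generative AI, as on-device AI. I think of an LLM as being like a search engine. It makes sense for a model like that to focus on showing accurate, detailed results from within a server. I see the territory for on-device AI as the tasks other than those LLMs. In fact, the AI tasks used across a wide range of industries already run smoothly enough as on-device AI. The purpose of the task itself is different.”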

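Building on its differentiated technology and competitiveness, ZETIC.ai plans to accumulate cases in Korea and then expand globally quickly. Contracts are already in progress with companies at home and abroad that have shown interest in MLange. Speaking about the global strategy, Kim said that “2025 will be a very important period.”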

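“I can't go into specifics because of NDAs (non-disclosure agreements), but we have already signed contracts with several Korean companies, and we are seeing a good response in India and the United States as well. Some of them are companies that have only offered their service on iOS and want to expand to Android. Next year we are thinking of shifting much of our business focus to the United States, because building up cases there is necessary for going global. If you look at the global market, many companies have also hit limits imposed by their environment. In Korea the infrastructure is good and internet access is easy, but abroad there are many places that can't offer AI services because they have no servers. That is the lens through which we are looking at the global market.”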

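What, then, is ZETIC.ai's next milestone in Kim's mind once the official release and global expansion have been achieved? AI technology is still advancing at a startling pace, and the limited performance and battery issues cited as weaknesses of on-device AI may well be resolved one way or another. Near the end of the interview, Kim spoke of the “democratization of AI” and shared the goal he has in mind.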

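“AI is currently tied to corporations, but our goal is to bring AI right in front of everyone. It means opening a new era of AI. We are focused on mobile for now, but in the future we should be able to expand to support other hardware. That will come after we have become the standard in the mobile industry (laughs). On the business side, we are also planning a service that provides AI models people can pick up and use whenever they want.”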